You have read about the massive power needs of data centers for AI and cryptocurrencies. Places with cheap power, like Texas, are experiencing a “gold rush” as data centers are built there to take advantage of it. This is how Your Survival Guy likes to invest in AI and cryptocurrencies: through the power they consume. In The Wall Street Journal, Peter Landers explains the future of powering data centers and what that means for the grid, writing:

Chip-design company Arm made its name by devising ways to minimize smartphones’ power consumption and extend battery life. Now, the company’s head says the same push for energy efficiency is needed in artificial-intelligence applications.

Rene Haas, chief executive of Arm, spoke ahead of an announcement Tuesday by the U.S. and Japan about a $110 million program to fund AI research at universities in the two countries. U.K.-based Arm and its parent, Tokyo-based SoftBank Group, are together offering $25 million in funding for the program.

AI models such as OpenAI’s ChatGPT “are just insatiable in terms of their thirst” for electricity, Haas said in an interview. “The more information they gather, the smarter they are, but the more information they gather to get smarter, the more power it takes.”

Without greater efficiency, “by the end of the decade, AI data centers could consume as much as 20% to 25% of U.S. power requirements. Today that’s probably 4% or less,” he said. “That’s hardly very sustainable, to be honest with you.”

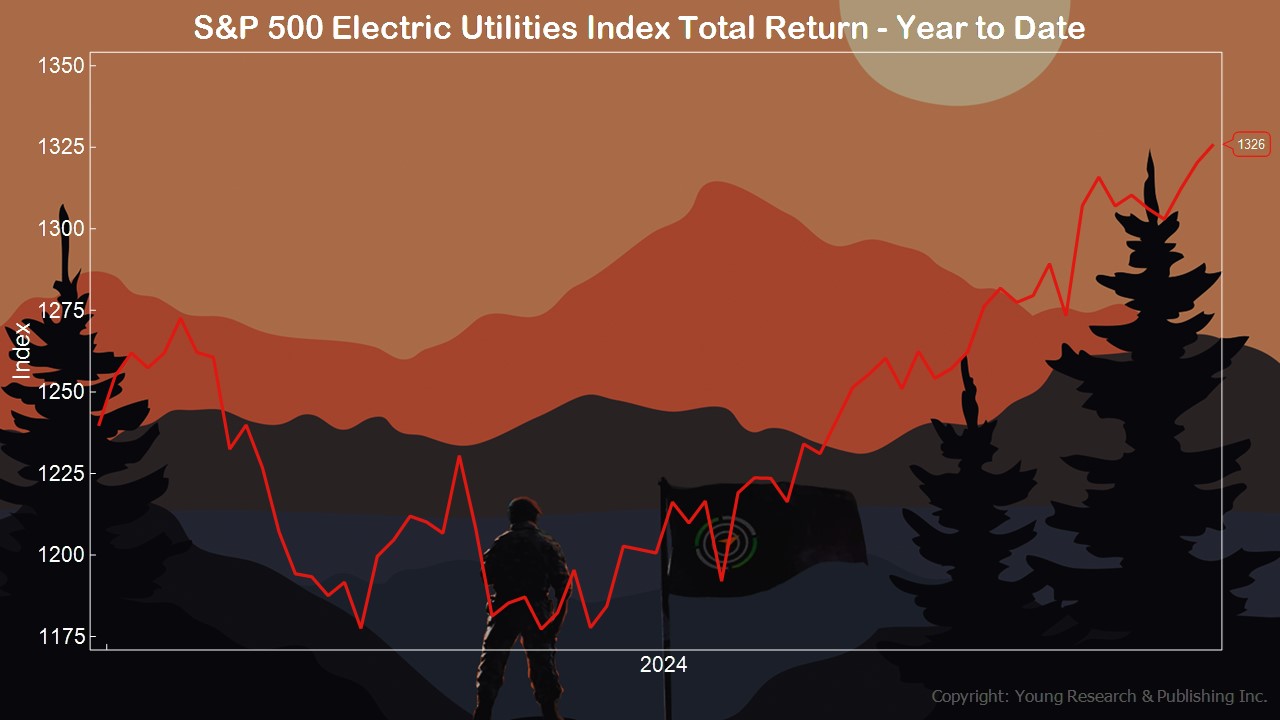

The power issue has drawn growing attention from technology executives in recent months and helped drive up the stock prices of companies that own and operate electric-power plants.

In a January report, the International Energy Agency said a request to ChatGPT requires 2.9 watt-hours of electricity on average—equivalent to turning on a 60-watt lightbulb for just under three minutes. That is nearly 10 times as much as the average Google search. The agency said power demand by the AI industry is expected to grow by at least 10 times between 2023 and 2026.

“It’s going to be difficult to accelerate the breakthroughs that we need if the power requirements for these large data centers for people to do research on keeps going up and up and up,” Haas said.

Action Line: Keep your eye on the demand for power and what that means for utilities and power producers.